AI has shifted enterprise marketers’ focus from content velocity to visibility. McKinsey estimates brands could see traffic declines of 20% to 50% as more people get answers from AI search tools like ChatGPT and Perplexity rather than clicking through search results to a website.

You’ll still need to produce blog posts, ebooks, and other assets to help educate customers, but now it takes more work to make sure they see them. Much like investigative reporters, AI search tools don’t provide details on how they choose their sources, or even reveal all of them. The emphasis is on digging up details and providing searchers a succinct story that answers a conversational prompt.

Brands can’t leave it up to chance whether they appear in AI search results or not. The domain expertise and proprietary data behind the best marketing content represent critical intellectual property (IP). Being cited can affect everything from your brand authority to whether prospects go deeper to visit your site and convert into customers.

In the absence of AI companies educating the market on how their models operate, generative engine optimization (GEO) or answer engine optimization (AEO) involves an ongoing learning curve. Here’s the latest:

The current state of AI search citations

A rise in zero-click search behaviors has everybody zeroing in on the art of getting cited: 90% of organizations fear that Google’s AI Overviews, its AI Mode, and other tools’ large language models (LLMs) are making marketing content less visible online.

Compounding the problem is that there are no universal standards in AI search citations. The variety your customer will see includes:

Inline source attribution linked to claims

Perplexity presents answers more like you’d expect from a grad student handing in an essay. It’s fairly easy to scan the basis for what it puts into its answers, such as brand websites. This is as close to ideal for enterprise marketing as you’re going to get.

Limited explicit citations

ChatGPT put generative AI on the map, but it doesn’t always lay out the exact route it takes to bring answers your way. You’ll see free-form responses with a few citations embedded in the text. If you’re using a paid version, there’s a “Sources” view that offers a more granular look at the content that fuels its responses.

Source clustering

Google Gemini and Anthropic Claude resemble that know-it-all friend who, if you press them on how they learned something, will suggest “studies show” or “people say.” They use partial citation frameworks that group sources into clusters rather than full attribution, making it even less enticing to fully fact-check an answer.

What’s common about all these AI search tools is the difference between the training data used to develop their models and the runtime actions and data that influence their output citation behavior.

AI search tools may be transparent about how they’re trying to limit bias in the training data, but they need to be equally vigilant in how output citation behavior verifies the accuracy and provenance of individual facts and claims.

As more business buyers use AI search to investigate new products and services, there will likely be a greater demand for source citations, at least within premium versions. In the meantime, the good news is that product content makes up between 46% and 70% of all cited sources. Those “best of” blog roundups, vendor comparisons, and product deep dives continue to pay off.

Why citations are inconsistent — and what that means

The truth is, leaning on AI search tools as a single source of truth is risky. Among people who use AI at work, 66% rely on its output without checking for accuracy.

It’s not just the speed and convenience these tools offer that makes checking their sources feel unnecessary. There are considerable technical and legal challenges in tracing data lineage through large models. Not all of us are experts in copyright and fair use considerations, and turning to the legal team could set off an alarm.

You’d also have to go through this exercise for each AI search tool you use. They are all backed by companies that have different business models, which influence their citation practices. Google has obviously aligned its model with its core search service. Others have more proprietary approaches because they are also helping users generate content, not just look up answers.

There’s an unfortunate trade-off in user experience as a result: your customers will get concise answers to a question or prompt, but without transparent sourcing.

Implications for enterprise marketers

Imagine if, instead of a top chef sharing their recipe, they just made the meal for you. That’s how AI search works. As a result, successful marketing is shifting from being primarily about attracting referral traffic to focusing on the hope of being referred to at all. You want customers and prospects to see the ingredients you’ve provided for the information they’re consuming.

When AI search tools don’t make it clear where their information is coming from, it’s not just a disservice to searchers. It means that the brands whose content is being scraped don’t receive proper credit, and the results may not accurately reflect their brand.

Inside marketing teams, this represents a sea change from basing SEO strategies on identifying the right keywords and weaving them into content. You need to publish content that is so valuable and authoritative that it gets woven into the AI search tool’s response.

Tracking and measuring AI visibility

There are a growing number of AI search visibility tools, but you may not need to bolt on another point product to your tech stack just yet.

It’s more important to have a solid analytics platform that can offer a holistic view of content performance, where more specific AI visibility features will be added over time.

As they explore new revenue streams, AI search tool providers may offer their own services to track visibility, along with partnership opportunities that help brands boost their presence in AI search citations.

You can also do your own AI visibility audit today, simply by doing what the best marketers have always done: look at the world through their audience’s eyes. Here’s an example:

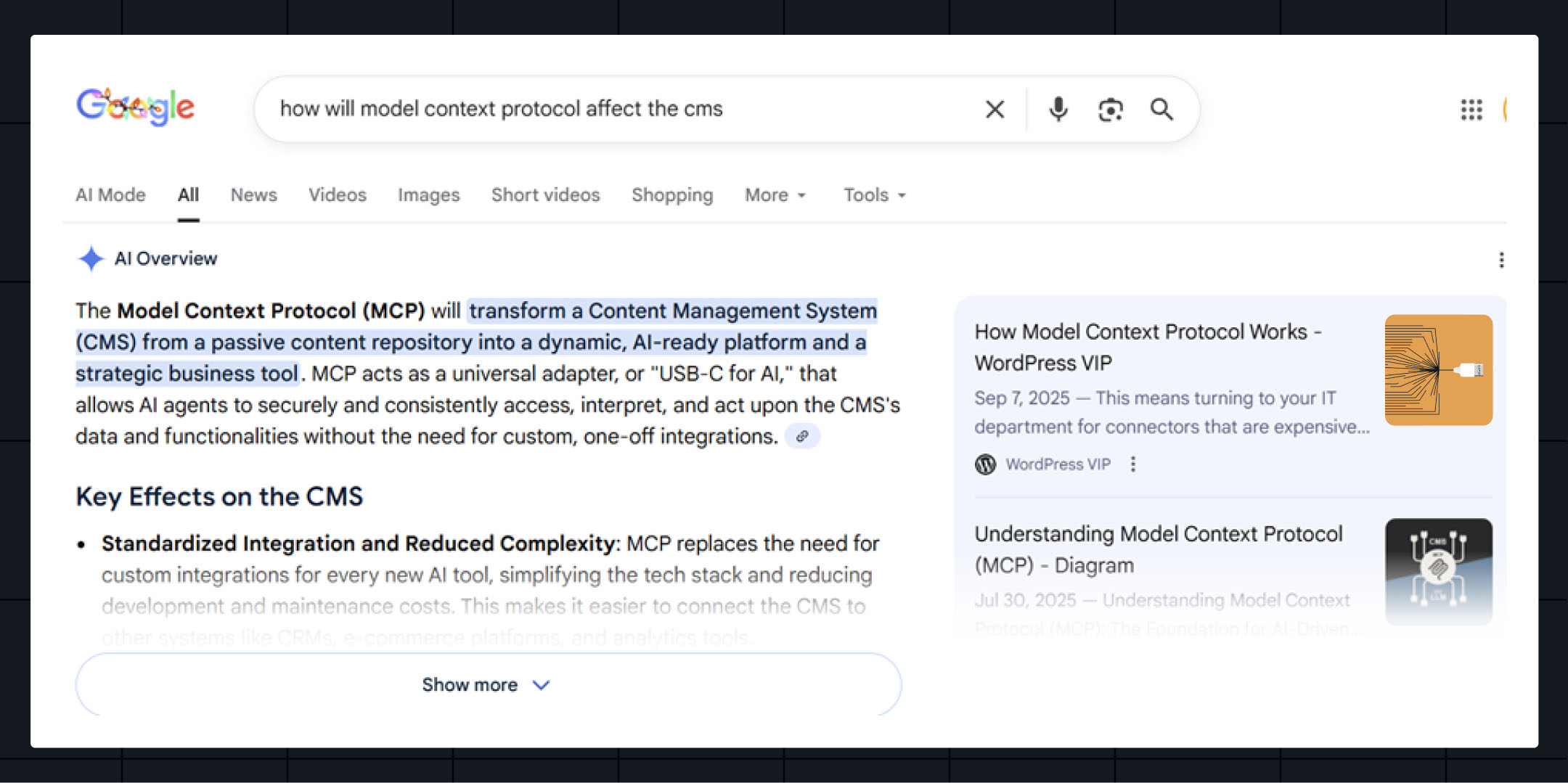

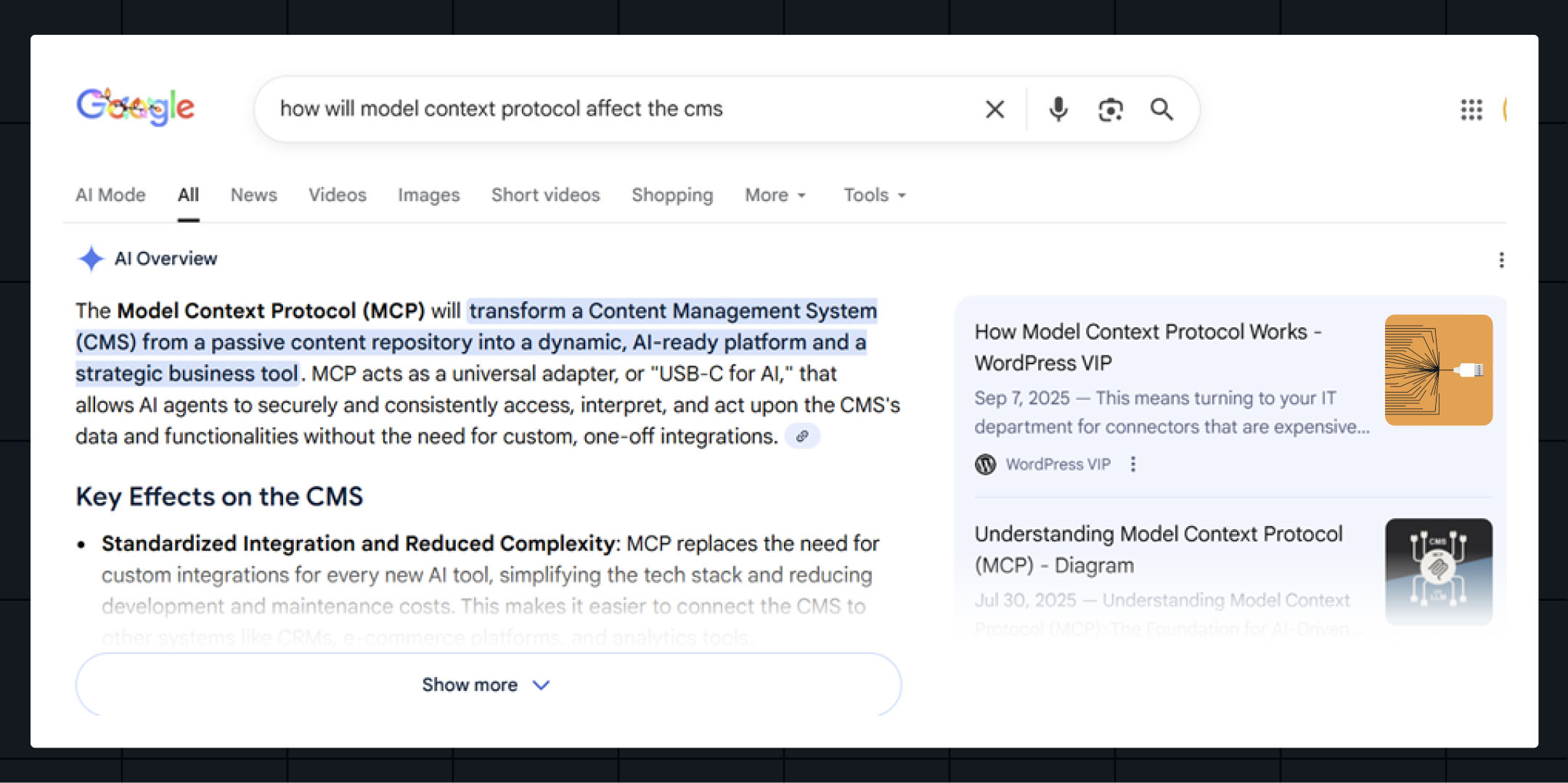

- Think about a common issue facing your customers. It could be a threat, an opportunity, or a mix of the two. Here at WordPress VIP, for instance, we know that marketers are becoming more AI-focused and learning how standards like Model Context Protocol (MCP) are making it easier to connect AI solutions to their tech stack. As that happens, they might wonder how it will affect their content management system (CMS).

- Use an AI search tool and ask a conversational-style question you think your audience might ask. In this case, we used “How will model context protocol affect the CMS?”

- Analyze the response and look for citations. In this case, our blog post was the key source cited in the Google AI Overview. This is what you want to see.

Of course, this citation could get pushed down or buried in favor of another source over time. Much like SEO, getting cited by AI search tools is an ongoing work in progress. This was also a fairly specific query, one where we knew we had recently published a relevant piece of content. You should conduct similar tests based on a range of queries your customers might pose.

This may not be easy to do at scale without automation, so prioritize the questions that lead to the products, services, and subject areas you feel are top of mind among your most important audience segments.
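If you log each manual check as you go, a few lines of Python can help summarize the audit. The sketch below is only one possible approach, not a feature of any AI search tool: it assumes you have copied the cited URLs out of each response by hand, and the `AuditResult` record, the 1-to-3 `priority` score, the tool labels, and the `example.com` URLs are all hypothetical placeholders rather than real audit data.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class AuditResult:
    """One manual check: the prompt, the tool used, and the URLs it cited."""
    query: str
    tool: str
    cited_urls: list[str] = field(default_factory=list)
    priority: int = 1  # hypothetical score: 3 = most important audience segment


def is_cited(result: AuditResult, domain: str) -> bool:
    """True if any cited URL sits on `domain` or one of its subdomains."""
    for url in result.cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False


def citation_rate(results: list[AuditResult], domain: str) -> float:
    """Share of audited prompts in which `domain` was cited at least once."""
    if not results:
        return 0.0
    return sum(is_cited(r, domain) for r in results) / len(results)


def gaps(results: list[AuditResult], domain: str) -> list[AuditResult]:
    """Prompts where the brand was not cited, highest priority first."""
    missed = [r for r in results if not is_cited(r, domain)]
    return sorted(missed, key=lambda r: r.priority, reverse=True)


# Placeholder entries for illustration only; replace with your own checks.
results = [
    AuditResult("How will MCP affect the CMS?", "tool-a",
                ["https://www.example.com/blog/mcp-cms",
                 "https://other.example.org/post"], priority=3),
    AuditResult("What is generative engine optimization?", "tool-b",
                ["https://other.example.org/geo"], priority=2),
    AuditResult("Which CMS works best with AI tools?", "tool-a",
                [], priority=3),
    AuditResult("How do AI tools cite sources?", "tool-b",
                ["https://notexample.com/page"], priority=1),
]

print(f"Cited in {citation_rate(results, 'example.com'):.0%} of prompts")
for missed in gaps(results, "example.com"):
    print(missed.priority, missed.tool, missed.query)
```

Sorting the misses by priority keeps attention on the questions that matter most to your key audience segments. Because answers and citations shift over time, rerunning the same prompts on a schedule would show whether your visibility is improving or slipping.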

Strategic actions for enterprise marketing teams

Beyond an AI visibility audit, getting cited will require changing the way your team works and how your organization engages online.

Start with what always matters, which is creating content where claims and facts are fully verifiable, especially if you’re using first-party data you’ve collected.

As that happens, connect with your legal and communications teams to determine what you publish, considering how it might be scraped and cited.

It might also make sense to approach AI search providers about a more formal partnership to feature your content as opportunities become available.

Also, continue to invest in building owned channels like your website, and have a CMS like WordPress VIP in place to assist with content architecture, streamlined content production, and collaboration. As the Content Marketing Institute put it, AI can’t kill websites that build connection.

For more actionable advice on this, we’ve created a free in-depth guide on generative engine optimization (GEO), chock-full of best practices you can apply and share with colleagues.

The road ahead: toward standardization and fair attribution

We’re still at the beginning of the AI-native web era, and the way AI search tools cite content is bound to evolve.

Some of this will be driven by the search tool providers. There are also growing movements like the Content Authenticity Initiative that are pushing for greater transparency to build trust in the way AI interacts with content.

The best enterprise marketers will find a way to get noticed by AI search tools, just as they did with traditional search engines. And successfully offering answers through generative engines will always depend on the quality of the sources they draw upon.

Frequently asked questions

How do AI search tools choose what sources to cite?

Tools like ChatGPT, Perplexity, and Google’s AI Overviews all work differently but tend to scrape and cite content that is high-quality, verifiable, and well-structured.

How can I get our brand featured in AI search answers?

There is no formal, guaranteed process for getting cited in AI search. Much like SEO, it requires ongoing work to build online authority and produce content that aligns with what people are asking tools powered by generative engines.

Does ChatGPT cite sources differently from Perplexity?

Yes, ChatGPT tends to offer limited text citations, while Perplexity offers inline source attribution linked to claims. Tools like Anthropic’s Claude and Google’s Gemini group sources into clusters for those who want to dig deeper.

Shane Schick

Founder, 360 Magazine

Shane Schick is a longtime technology journalist serving business leaders ranging from CIOs and CMOs to CEOs. His work has appeared in Yahoo Finance, the Globe & Mail and many other publications. Shane is currently the founder of a customer experience design publication called 360 Magazine. He lives in Toronto.